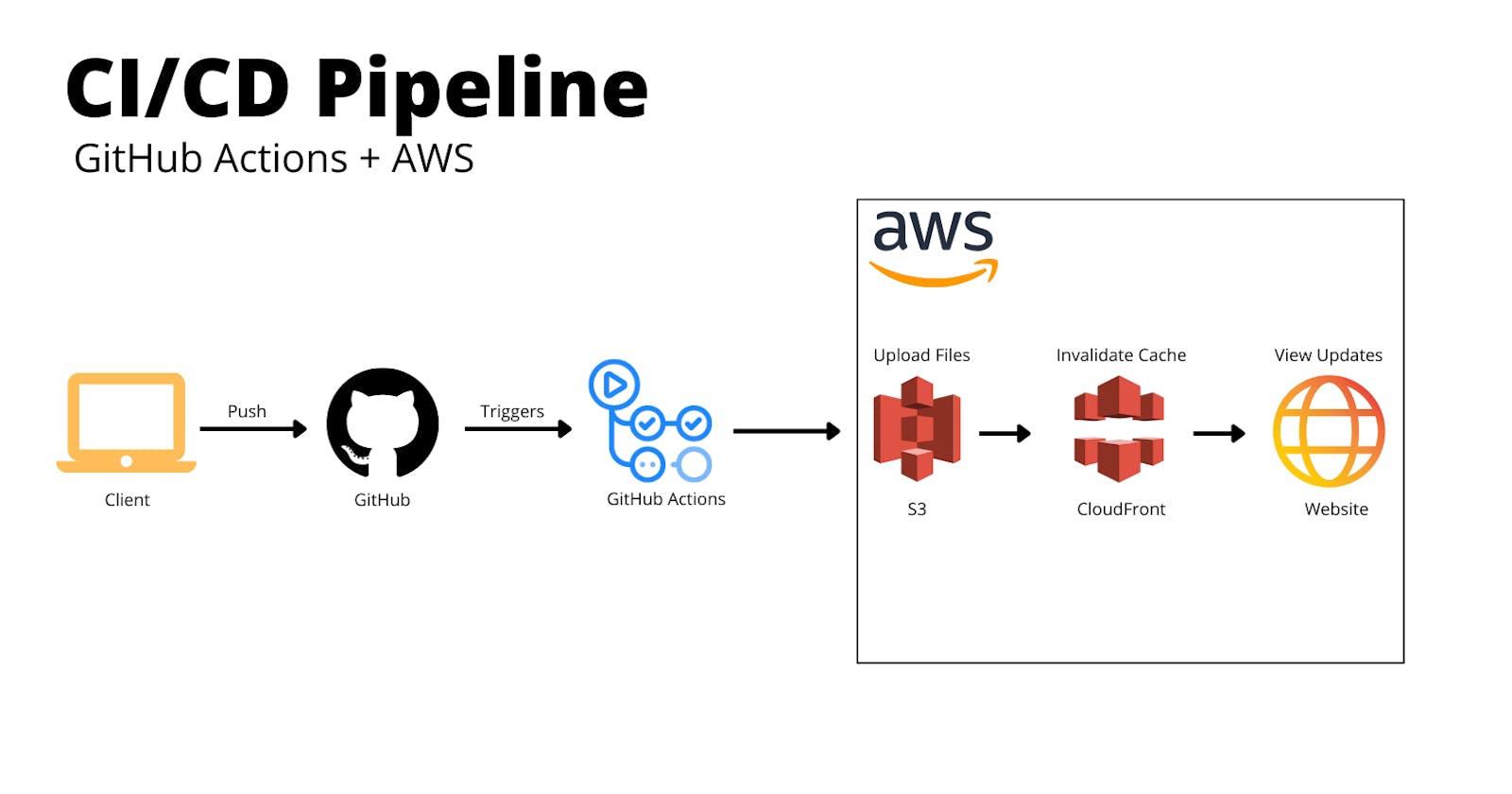

Create a CI/CD Pipeline with GitHub Actions and AWS

Learn how to create a CI/CD Pipeline with GitHub Actions to update a website on AWS with one command.

In my previous article, Hosting a Static Website with AWS - S3, Route 53 & CloudFront, I guided my wonderful readers through hosting their very own secure and fast static website. But what if you want to make updates to it? Until now, I had to manually upload the files into the S3 bucket and manually create an invalidation in CloudFront. The manual updating was getting old, so I sought out a solution with these requirements:

- Needs to be free...I'm still a poor graduate.

- Updates to my website should be deployed when I push to my GitHub repository.

Introducing GitHub Actions to the rescue!

What's GitHub Actions 🎬

GitHub Actions is a continuous integration and continuous delivery (CI/CD) platform that allows developers like ourselves to automate builds, testing and deployments through the creation of "workflows". For public repositories, GitHub Actions is completely free! This guide will be scratching the surface of what GitHub Actions can really provide, and I highly suggest checking the documentation out.

Workflows

Workflows are the bread and butter of GitHub Actions. A workflow is a fully customizable YAML file that defines the actions we want to perform, and it lives in the repository where you want it to run. The components that make up a workflow are:

- Events: Defines what triggers the workflow to run.

- Jobs: Defines the steps in the workflow.

- Actions: The functions performing the work.

- Runners: Servers that run the workflows.

Setting Up ⚒️

We will be using two public Actions found on the GitHub Marketplace. They act as an abstraction, similar to a library, over the AWS Command Line Interface (CLI), so you can perform actions on your AWS account without having to write all the commands yourself.

- S3 Sync: Syncs your GitHub repository to your S3 bucket holding the content of your application/website.

- Invalidate AWS CloudFront: Performs invalidations in CloudFront.
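To make the abstraction concrete, here is a rough sketch of the two AWS CLI calls that these Actions wrap. It assumes the AWS CLI is installed and configured with credentials that are allowed to write to the bucket and invalidate the distribution. The function name `deploy_site` and its two arguments are hypothetical, and the exact flags each Action passes internally may differ.

```shell
# Sketch: roughly what the two Actions do, written as plain AWS CLI calls.
# deploy_site is a hypothetical helper, not part of either Action.
deploy_site() {
  local bucket="$1"        # name of the S3 bucket that holds the site
  local distribution="$2"  # CloudFront distribution ID

  # Upload new or changed files and remove files that no longer exist locally.
  # The .git folder is excluded so repository internals are not uploaded.
  aws s3 sync . "s3://${bucket}" --delete --exclude ".git/*" || return 1

  # Ask CloudFront to drop its cached copies so visitors get the new files.
  aws cloudfront create-invalidation \
    --distribution-id "${distribution}" \
    --paths "/*"
}

# Usage (placeholder values), run from the folder with your website files:
#   deploy_site my-bucket-name E2EXAMPLE123
```

The workflow built in this guide runs the same two operations on a GitHub runner every time you push, so you never have to type them yourself.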

Before we can get to using these Actions, we need to set up our environment so we can run smoothly.

Prerequisites

If you have not used AWS to host your own website, I highly recommend you read Hosting a Static Website with AWS - S3, Route 53 & CloudFront to get your website up and running in no time! For this guide, you'll need:

- GitHub Account with your website files in a PUBLIC repository.

- Git installed.

- AWS Account (Free Account Setup).

- Hosted website using S3 Buckets and CloudFront.

Step 1. Setting up an IAM User

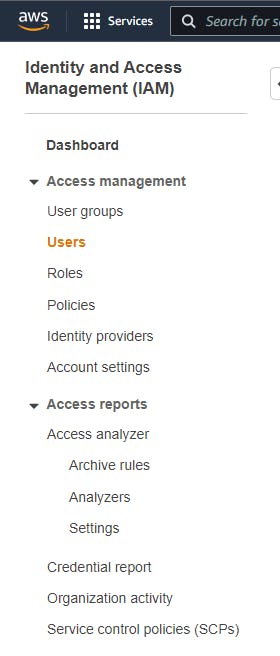

First, we need to set up an IAM user. IAM stands for "Identity and Access Management", and it lets you create a user with finely tuned permissions. AWS recommends this for the security of your account, because it gives you control over what a user can do with AWS services and resources.

Log into your AWS account and go to the IAM dashboard.

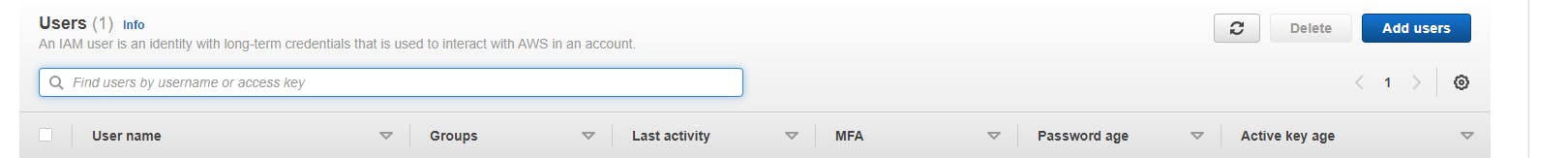

Click on Users.

Click Add Users.

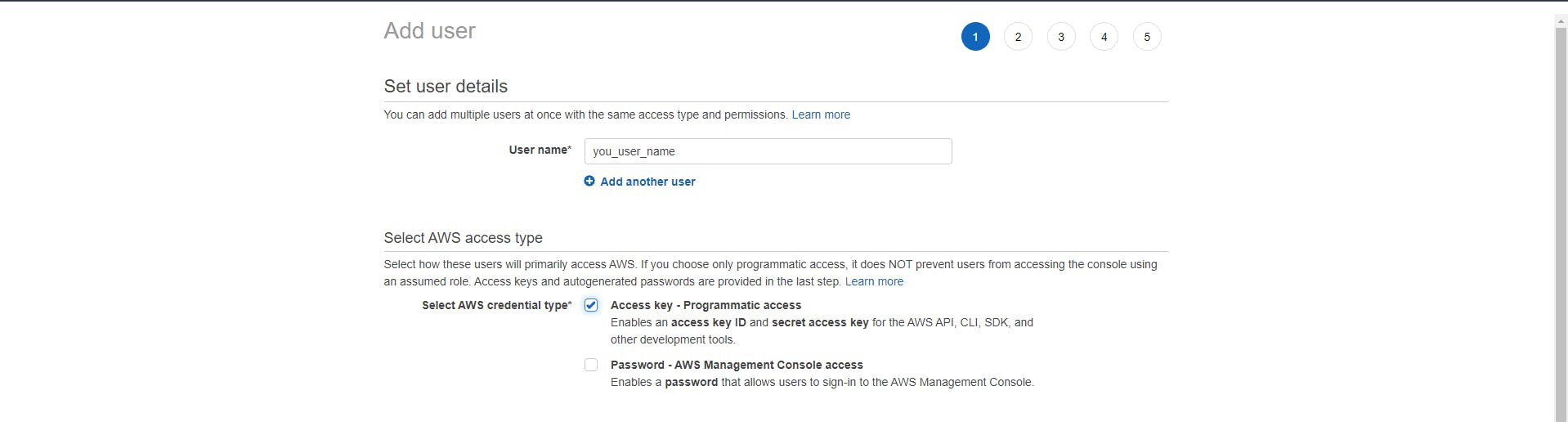

- Create a User name.

- Select the AWS credential type: Access key - Programmatic access and then click the "Next-Permissions" button.

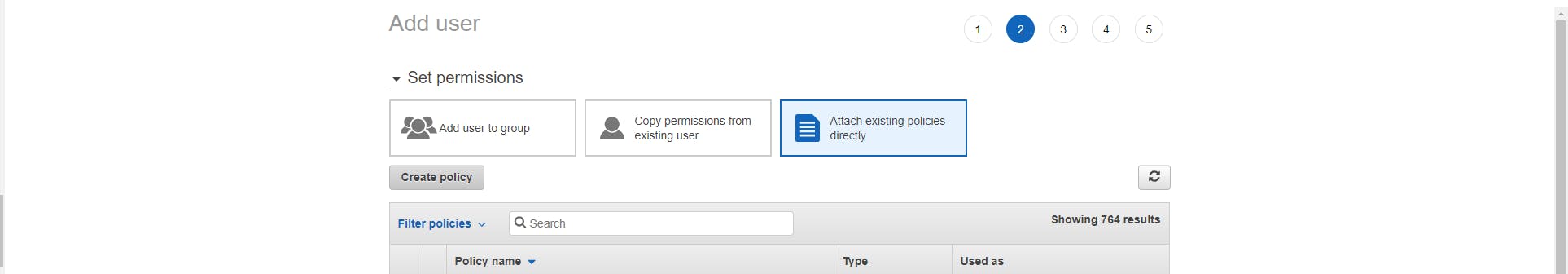

- Click on "Attach existing policies directly" and then click "Create policy".

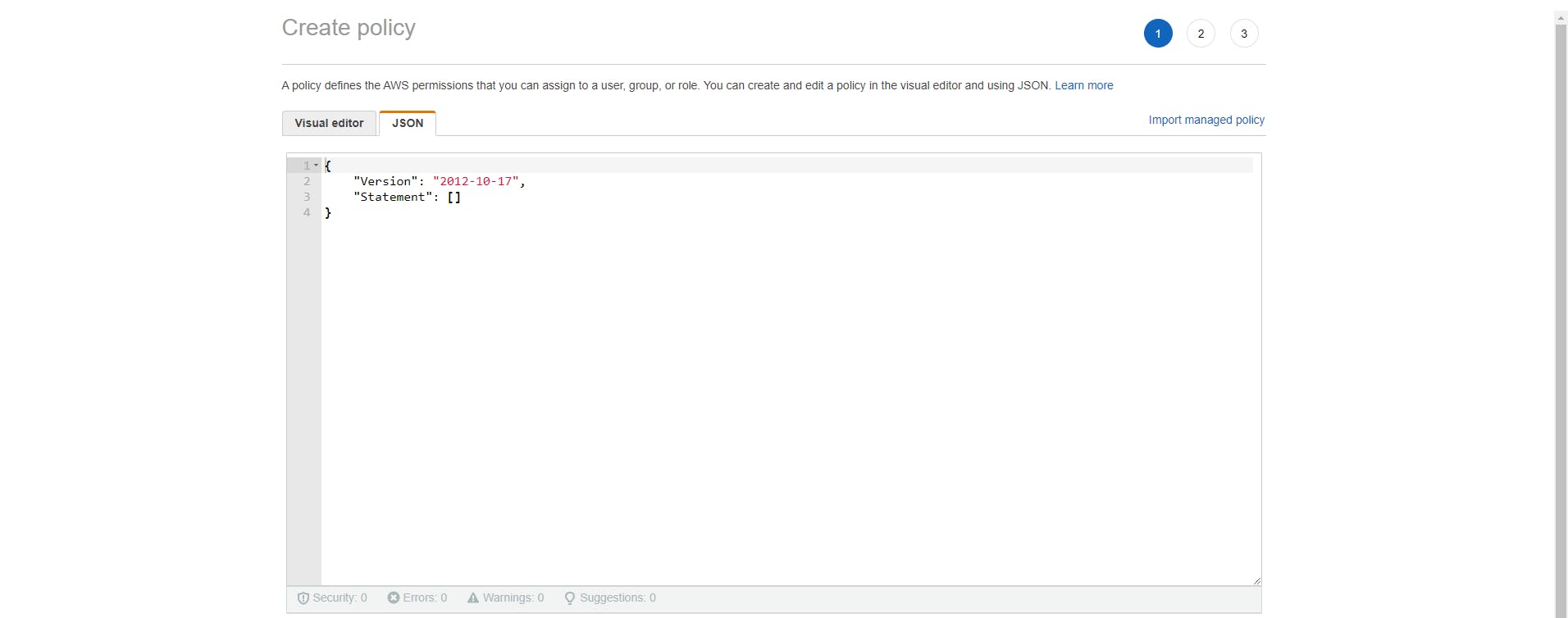

- Select "JSON".

- Add these permissions:

      {
        "Version": "2012-10-17",
        "Statement": [
          {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": "cloudfront:CreateInvalidation",
            "Resource": "[your_distribution_arn]"
          },
          {
            "Effect": "Allow",
            "Action": [
              "s3:*",
              "s3-object-lambda:*"
            ],
            "Resource": "*"
          }
        ]
      }

- Replace "[your_distribution_arn]", brackets included, with the ARN found in the "Details" section of your CloudFront distribution. The ARN has the form arn:aws:cloudfront::[account_id]:distribution/[distribution_id].

- Click "Next: Review"

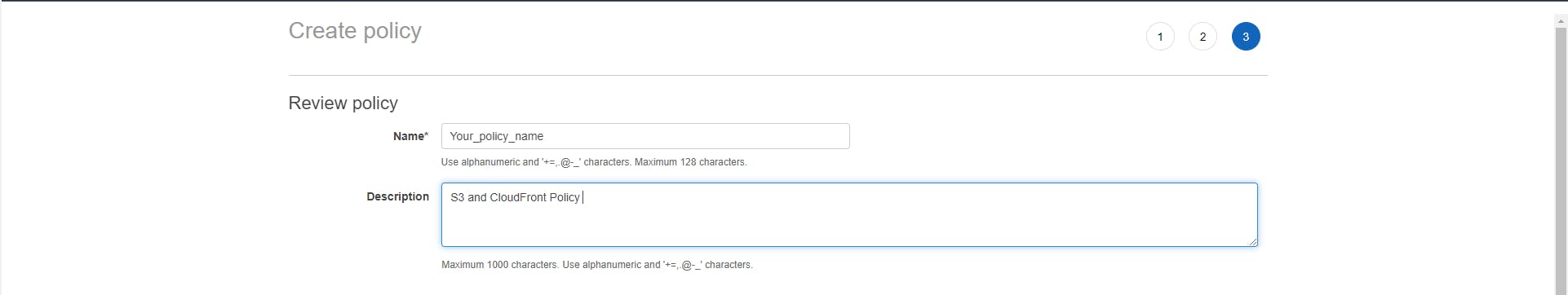

- Give your policy a name and a description and then click "Create Policy".

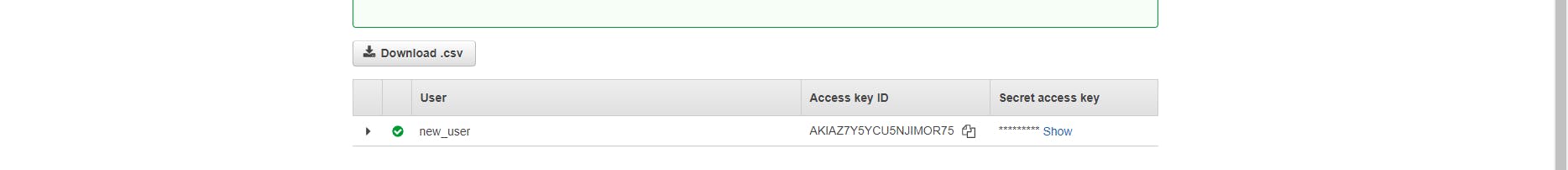

- Once you've created a user successfully, a screen will pop up with the Access Key ID and Secret Access Key. Save these somewhere safe for later, because the Secret Access Key is only shown once!

Breakdown of the Policies

- The first policy statement allows the cloudfront:CreateInvalidation action and names the CloudFront distribution that the IAM user is allowed to work on.

- The second policy statement allows the IAM user to perform all actions on any S3 bucket. If you'd like to specify only a single S3 bucket for your IAM user to perform actions on, I suggest checking out these docs: Bucket policies and user policies.
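If you want to try the narrower option, the sketch below writes a policy file that covers a single bucket only. The bucket name and the file name `s3-deploy-policy.json` are placeholders, and the list of actions is my assumption of what a sync with `--acl public-read` and `--delete` needs, so check it against the AWS docs before relying on it.

```shell
# Sketch: write a narrower IAM policy that only covers one bucket.
# BUCKET and the output file name are placeholders chosen for this example.
BUCKET="${BUCKET:-my-bucket-name}"

cat > s3-deploy-policy.json <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:ListBucket",
      "Resource": "arn:aws:s3:::${BUCKET}"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:PutObject",
        "s3:PutObjectAcl",
        "s3:DeleteObject"
      ],
      "Resource": "arn:aws:s3:::${BUCKET}/*"
    }
  ]
}
EOF

echo "Wrote s3-deploy-policy.json for bucket ${BUCKET}"
```

The two statements in the generated file would take the place of the second statement in the policy above. Note that listing applies to the bucket itself, while uploads and deletes apply to the objects inside it, which is why there are two different resource ARNs.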

Step 2. Adding the .github/workflows Folder and YAML File

Workflows are stored in a .github/workflows folder in your repository, so we will create this folder on GitHub.

- Log into your GitHub account and go to the repository that will use the workflow.

- Click the "Add file" selector and choose "Create new file".

- In the "name your file" box, enter .github/workflows/[your-workflow.yml]. Replace the bracketed part with the name of your file, giving it the .yml file extension.
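If you prefer to work locally instead of in the GitHub web editor, the same folder and file can be created from a terminal. This is a minimal sketch that assumes you run it from the root of your cloned repository; the file name `deploy.yml` is a placeholder.

```shell
# Sketch: create the workflow folder and an empty workflow file locally.
# "deploy.yml" is a placeholder name; any name ending in .yml works.
mkdir -p .github/workflows
touch .github/workflows/deploy.yml

# Show the result so you can confirm the file is where GitHub expects it.
ls .github/workflows
```

The file only takes effect on GitHub once it has been committed and pushed.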

Step 3. Setting up GitHub Environment Variables

The workflow YAML file relies on sensitive information, namely your:

- AWS Access Key ID: This is the IAM user's Access Key ID saved earlier.

- AWS Secret Access Key: This is the IAM user's Secret Access Key saved earlier.

- AWS Distribution ID: This is the Distribution ID that is found under the "ID" column of your main Distributions page.

- AWS S3 Bucket Name: Name of your S3 bucket found under the "Name" column of your main Buckets page.

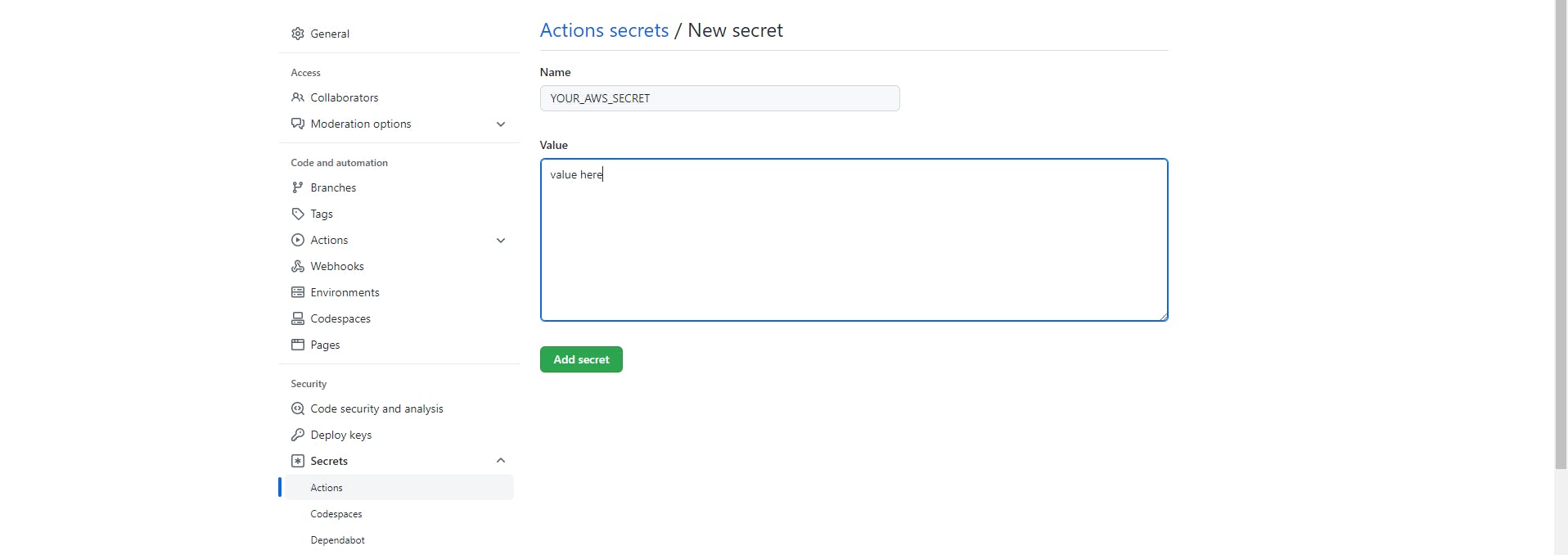

The way we can protect these from being viewed by the public is through environment variables, specifically "Repository Secrets" which are encrypted environment variables. Repeat the steps below for the four pieces of information above.

- In your repository, click "Settings".

- Scroll to the "Security" section, click the "Secrets" dropdown and select "Actions".

- Click "New repository secret".

- Add a "Secret Name" in the Name field. Example: AWS_ACCESS_KEY_ID.

- Add the information in the "Value" field.

- Click "Add secret".
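The same four secrets can also be added from a terminal with the GitHub CLI. This sketch assumes `gh` is installed, that you have already run `gh auth login`, and that you are inside the repository folder. The helper name `add_deploy_secrets` and the four secret names are examples only; use whatever names your workflow refers to.

```shell
# Sketch: add the four repository secrets with the GitHub CLI.
# The secret names below are examples, not names the workflow requires.
add_deploy_secrets() {
  local name
  for name in AWS_S3_BUCKET AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY DISTRIBUTION_ID; do
    # With no value given, gh prompts for it, which keeps the value
    # out of your shell history.
    gh secret set "${name}" || return 1
  done

  # List the secret names (values are never shown) to confirm all four exist.
  gh secret list
}

# Usage, from inside the repository folder:
#   add_deploy_secrets
```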

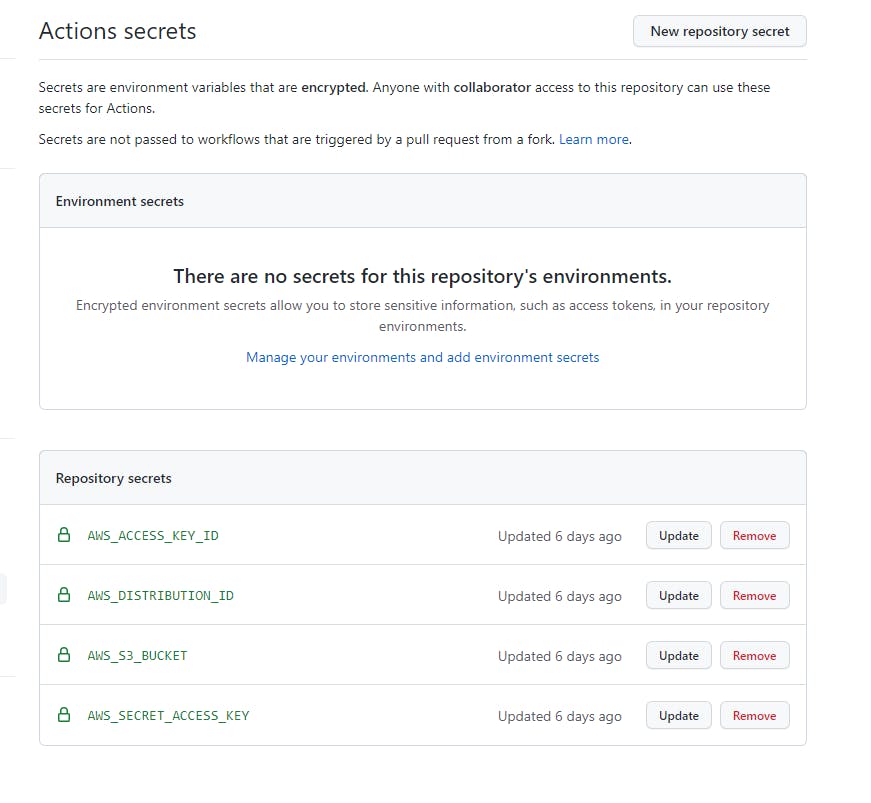

You should have 4 repository secrets, and they should look like this:

The YAML File 📁

Here is the complete YAML file we will be using. Copy this code and paste it into the YAML file created earlier.

    name: Upload files for website and invalidate cache - AWS

    on:
      push:
        branches:
          - main

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v3
            with:
              ref: main

          - name: S3 Sync
            uses: jakejarvis/s3-sync-action@master
            with:
              args: --acl public-read --follow-symlinks --delete
            env:
              AWS_S3_BUCKET: ${{ secrets.YOUR_S3_BUCKET_VARIABLE }}
              AWS_ACCESS_KEY_ID: ${{ secrets.YOUR_ACCESS_KEY_ID_VARIABLE }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.YOUR_SECRET_ACCESS_KEY_VARIABLE }}
              AWS_REGION: 'us-east-1'

          - name: Invalidate CloudFront
            uses: chetan/invalidate-cloudfront-action@v2
            env:
              DISTRIBUTION: ${{ secrets.YOUR_DISTRIBUTION_ID_VARIABLE }}
              PATHS: "/*"
              AWS_REGION: "us-east-1"
              AWS_ACCESS_KEY_ID: ${{ secrets.YOUR_ACCESS_KEY_ID_VARIABLE }}
              AWS_SECRET_ACCESS_KEY: ${{ secrets.YOUR_SECRET_ACCESS_KEY_VARIABLE }}

After pasting, make these changes:

- Under branches:, enter the name of the branch you want to trigger this workflow. Since I push all my changes to my "main" branch, I wrote - main.

- Under uses: actions/checkout@v3 and its with: block, replace "main" that is declared in the ref tag with the name of the branch used.

- Replace the secrets under both env sections in the file with your secret key names.

Breakdown of the YAML File

At first glance, this file looks complicated but don't worry, I'm going to break this down into three digestible parts and go over each piece of code.

Part 1. Preliminary Info & Checkout Action

    name: Upload files for website and invalidate cache - AWS

    on:
      push:
        branches:
          - main

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v3
            with:
              ref: main

- name: Upload files for website and invalidate cache - AWS: This is just a top-level declaration for the name of the workflow.

- on: push: branches: - main: This describes an event: when I push to my "main" branch, this workflow will run. The branch can be any named branch in your repository.

- jobs: Workflows are made up of "jobs", and this tag sets up the runner and the steps (Actions) that will be performed. This job runs on Ubuntu, and the "latest" tag selects the latest version. If you would like to run your application on a different runner, check out the docs: Choosing the runner for a job.

- steps: This tag holds all the steps (Actions) that will be run.

- name: Optional tag that gives a name to the step that will be performed.

- uses: actions/checkout@v3: This specifies what action will be performed. Actions can be found in the Marketplace. This action checks out the branch that the workflow will work on.

- with: ref: main: Specifies that I want the "main" branch checked out.

Part 2. S3 Sync Action

    - name: S3 Sync
      uses: jakejarvis/s3-sync-action@master
      with:
        args: --acl public-read --follow-symlinks --delete
      env:
        AWS_S3_BUCKET: ${{ secrets.YOUR_S3_BUCKET_VARIABLE }}
        AWS_ACCESS_KEY_ID: ${{ secrets.YOUR_ACCESS_KEY_ID_VARIABLE }}
        AWS_SECRET_ACCESS_KEY: ${{ secrets.YOUR_SECRET_ACCESS_KEY_VARIABLE }}
        AWS_REGION: 'us-east-1'

- uses: jakejarvis/s3-sync-action@master: This is the S3 Sync action that will perform the updates to a specific S3 bucket.

- with: args: --acl public-read --follow-symlinks --delete: Adds arguments to this action, all of which are optional.

  - --acl public-read: Makes files publicly readable, which is required for S3 static websites.

  - --follow-symlinks: Uploads the files that symbolic links point to, which avoids symbolic link problems.

  - --delete: Deletes any files in the S3 bucket that are no longer present in the latest version of the website that was pushed.

- env: Declares the credentials and S3 bucket that will receive updates.

  - AWS_S3_BUCKET: Holds the name of the bucket.

  - AWS_ACCESS_KEY_ID: Access Key ID of the IAM user.

  - AWS_SECRET_ACCESS_KEY: Secret Access Key of the IAM user.

  - AWS_REGION: 'us-east-1': The region that the S3 bucket is hosted in. Mine is us-east-1; if your bucket lives in a different region, specify that region instead.
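Because --delete removes files from the bucket, it can be worth previewing a sync before trusting the workflow with it. The sketch below assumes the AWS CLI is installed and configured locally and that you run it from the folder with your website files; `preview_sync` is a hypothetical helper name and the bucket name is a placeholder.

```shell
# Sketch: preview what a sync with --delete would change, without changing anything.
# preview_sync is a hypothetical helper, not part of the S3 Sync action.
preview_sync() {
  local bucket="$1"  # name of the S3 bucket that holds the site

  # --dryrun prints the planned uploads and deletions but performs none of them.
  # The .git folder is excluded so repository internals are not considered.
  aws s3 sync . "s3://${bucket}" --delete --exclude ".git/*" --dryrun
}

# Usage (placeholder value):
#   preview_sync my-bucket-name
```

If the preview lists deletions you did not expect, the local folder and the bucket are probably not laid out the same way, and that is worth fixing before the first automated deploy.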

Part 3. Invalidate CloudFront Action

    - name: Invalidate CloudFront
      uses: chetan/invalidate-cloudfront-action@v2
      env:
        DISTRIBUTION: ${{ secrets.YOUR_DISTRIBUTION_ID_VARIABLE }}
        PATHS: "/*"
        AWS_REGION: "us-east-1"
        AWS_ACCESS_KEY_ID: ${{ secrets.YOUR_ACCESS_KEY_ID_VARIABLE }}
        AWS_SECRET_ACCESS_KEY: ${{ secrets.YOUR_SECRET_ACCESS_KEY_VARIABLE }}

- uses: chetan/invalidate-cloudfront-action@v2: This is the Invalidate CloudFront action that will perform the cache invalidation.

- DISTRIBUTION: The ID of the distribution that is serving your S3 bucket content.

- PATHS: "/*": The paths of the cached objects you want CloudFront to invalidate. The "/*" indicates that every file in the cache is invalidated, so CloudFront fetches fresh copies from your S3 bucket.

- AWS_REGION: "us-east-1", AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY: These are the same values as in the S3 Sync action.
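To confirm from a terminal that an invalidation was actually created, you can list the invalidations for your distribution. This sketch assumes the AWS CLI is installed and configured with credentials that are allowed to list invalidations, which the policy from Step 1 does not grant, so you would likely run it as a different user. `check_invalidations` is a hypothetical helper name.

```shell
# Sketch: list invalidations for a distribution to see whether one is in progress.
# check_invalidations is a hypothetical helper, not part of the Action.
check_invalidations() {
  local distribution="$1"  # CloudFront distribution ID

  # Each entry in the response carries an ID, a creation time, and a status.
  aws cloudfront list-invalidations --distribution-id "${distribution}"
}

# Usage (placeholder value):
#   check_invalidations E2EXAMPLE123
```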

Running the Workflow

Now that all the secrets have been added to GitHub, we are ready to run the Workflow!

- Open up your IDE that has your website files, and is connected to your GitHub.

- Pull the repository into your files with Git since the workflows folder and YAML file has been added.

git pull origin [branch name] - Make some changes to your website files.

- Commit the changes:

git commit -m " [description of commit]" - Push your files:

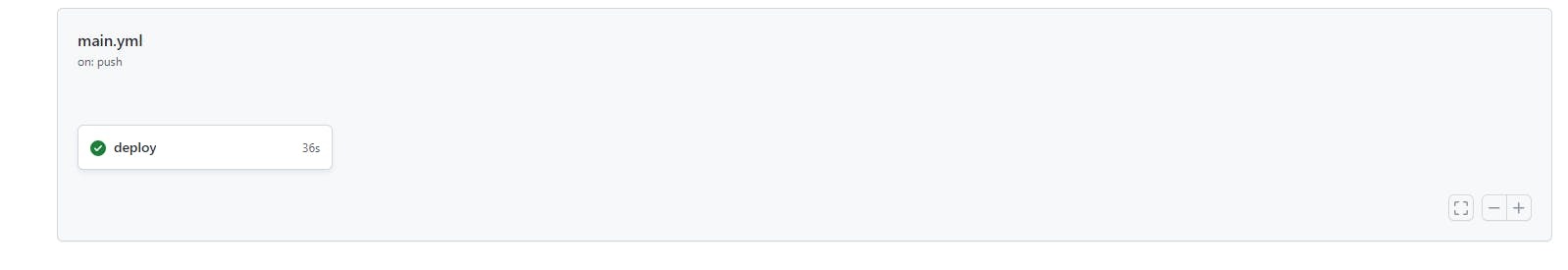

git push origin [branch name] - Go to your GitHub repository and click "Actions".

- Click on the workflow that is running.

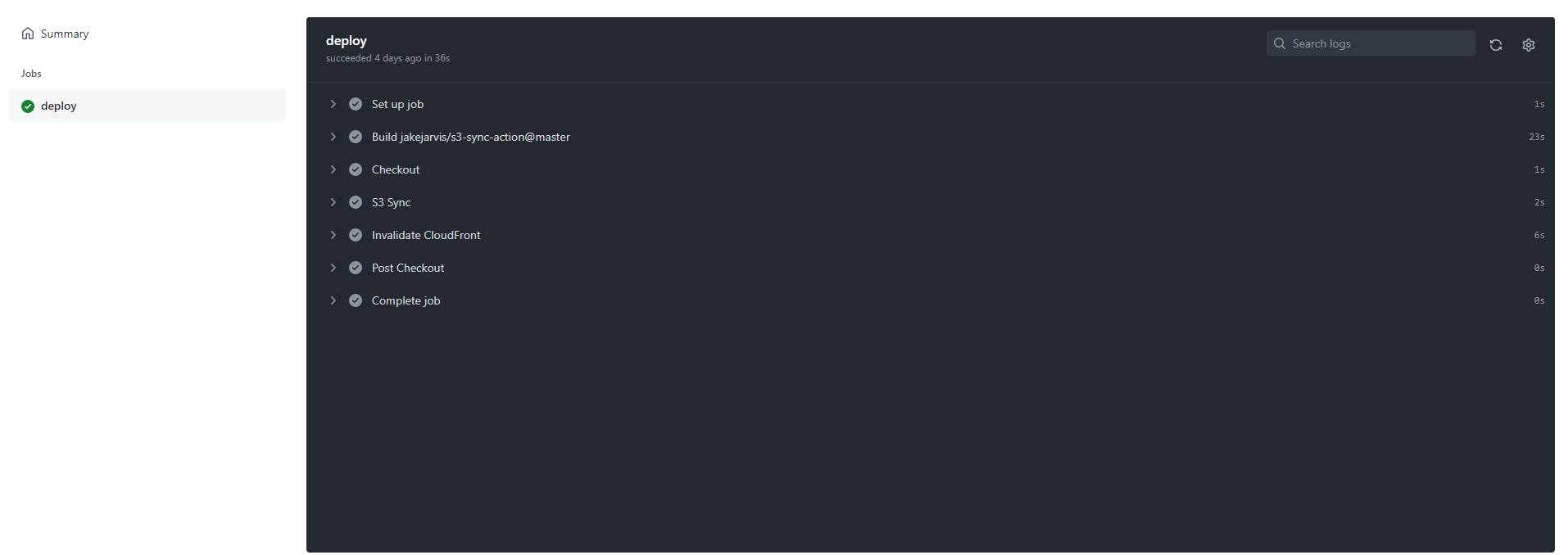

- Click on "deploy" to see the workflow running in action!

- If the job is successful, you should get checkmarks across the board ✔️✔️✔️
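If you would rather stay in the terminal after pushing, the GitHub CLI can follow the run for you. This sketch assumes `gh` is installed and authenticated and that you run it from inside the repository folder; `watch_latest_run` is a hypothetical helper name.

```shell
# Sketch: follow the workflow run from the terminal instead of the Actions tab.
# watch_latest_run is a hypothetical helper built on two gh subcommands.
watch_latest_run() {
  # Show the most recent run so you can see that the push triggered it.
  gh run list --limit 1 || return 1

  # Follow a run until it finishes; with no run ID, gh asks which run to watch.
  gh run watch
}

# Usage, right after "git push":
#   watch_latest_run
```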

Conclusion 🔚

Congrats🎉🍾!! You now have a working CI/CD Pipeline that updates your AWS hosted website and deploys those updates automatically with a simple git push origin [branch] command. GitHub Actions is powerful and you could create quite an elaborate CI/CD Pipeline for your next project, so I encourage you to dive into the deep end and get to learning 🧠🤯!

I know that the set-up part of this guide was tedious, but I hope you found some worth in it, and thank you so much for taking the time to read this. Subscribe to my newsletter so you can always see the latest Amazon Web Services Series article I publish. Next up will be a full tutorial on how to create a Spring Boot REST API and deploy it using Amazon Elastic Beanstalk!